About the hackathon

Get early access to Hafnia's Vision-Language Model as a Service (VLM-aaS)!

The hackathon gives you access to NVIDIA's Cosmos-Reason1-7B model. Your mission:

- Create innovative integrations with XProtect or third-party applications.

- Leverage the VLM to enhance smart city solutions, using video understanding, summarization, and context to complement real-time analytics.

- Build and submit your MVP demo for a chance to pitch live in Copenhagen at the Milestone Developer Summit (Nov 10–11).

What you get

Project Hafnia - Data & Services for Responsible Computer Vision

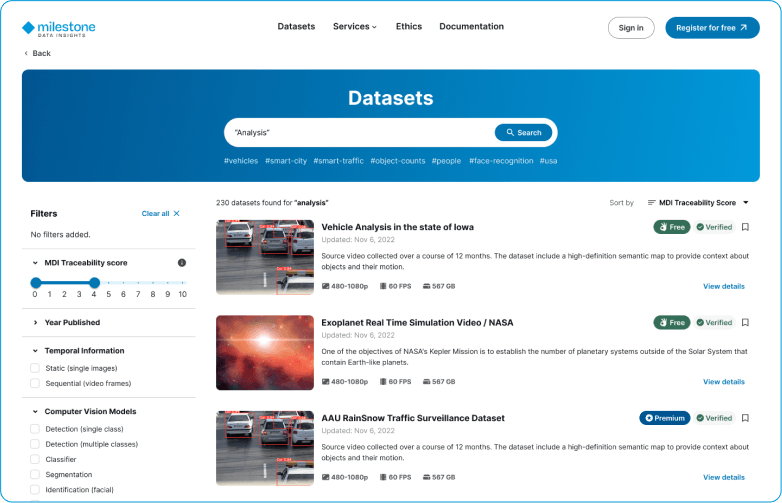

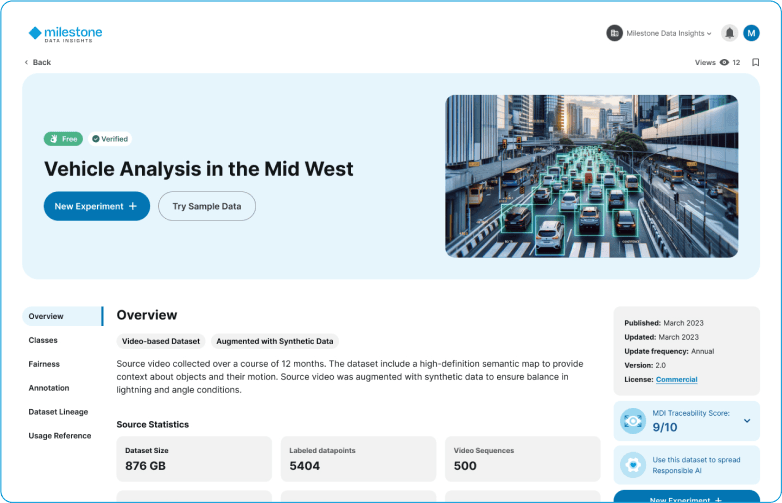

Project Hafnia is a compliance-first platform that combines a curated Data Library with Training-as-a-Service (TaaS) and VLM-as-a-Service (VLM-aaS) to speed up computer vision development. Hafnia bridges the gap between data owners and AI developers, turning real-world video into compliant, high-performance training fuel for next-generation analytics. Hafnia will be available soon.

Data Library: Get access to compliantly sourced real-world data and train your computer vision models on it. The data is curated, extensively annotated, and anonymized.

Training-as-a-Service (TaaS): Train models that outperform others and pass compliance audits. Simply write your training script, select your data and GPU, and receive a new, improved model with metrics that matter!

VLM-as-a-Service (VLM-aaS): Built on NVIDIA's Cosmos-Reason1-7B and fine-tuned on compliantly sourced real-world traffic data for higher accuracy in complex road environments. For this hackathon, you'll use the base Cosmos-Reason1-7B model (without fine-tuning).

Hackathon Prizes

Cash

€5,000 for first place, €3,000 for the runner-up, and €2,000 for third place

Promotion

Present your work to industry leaders, access the Hafnia developer community, and connect with social media influencers

Important dates

Hackathon duration: 13 October to 11 November 2025

- 13/10: Hackathon registration opens!

- 23/10: Two webinar/Q&A sessions.

- 24/10: Hackathon submissions open.

- 3/11: Final submission deadline.

- 11/11: Presentations at the Developer Summit and winners announced!

Judging criteria

Potential Value/Impact

Relevance to Smart City or surveillance use cases, with clear real-world applicability and potential to attract users, partners, or customers.

Creativity

Originality and innovative use of technology to solve practical problems with fresh, unique approaches.

Technological Execution

Strength of architecture and implementation: well-structured, reliable, and technically sound.

Functionality

Solution works as intended, scales effectively, and properly leverages available technology.

Demo Presentation

Clear, high-quality demo with strong overall impression and "wow" factor.

Participation Rules

Entrants must develop an integration of the Hafnia VLM-as-a-Service into a real-world application.

The integration should demonstrate how the VLM API can enhance Smart City, surveillance, or related use cases (e.g., validating real-time alerts with video clips, automating monitoring workflows, or improving decision-making through multimodal inputs).

Participants will receive tokens and access to the VLM API documentation and are expected to build a functional proof-of-concept that showcases a clear use case and value.

- Register online before the deadline

- Teams of up to 5 members

- Original projects only, built during the hackathon
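To make the alert-validation use case from the participation rules more concrete, here is a minimal Python sketch of one possible control flow: send the clip behind an analytics alert, plus a yes/no prompt, to the VLM, then map the answer to confirmed, rejected, or unclear. It assumes nothing about the real Hafnia VLM API: `ask_vlm`, `Alert`, `validate_alert`, and `parse_verdict` are hypothetical names, and the actual request format should be taken from the API documentation provided to participants. The `<answer>` tag handling is also an assumption, covering prompting setups that ask Cosmos-Reason1 to wrap its final answer in such tags.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical signature for "send a clip and a prompt to the VLM, get text back".
# The real Hafnia VLM API request format is in the hackathon API documentation
# and is deliberately not reproduced here.
VlmQuery = Callable[[bytes, str], str]


@dataclass
class Alert:
    alert_id: str
    event_type: str  # e.g. "vehicle stopped in bus lane" (illustrative)
    clip: bytes      # short video clip around the alert (encoding assumed)


def parse_verdict(answer: str) -> Optional[bool]:
    """Map the VLM's text answer to True (confirmed), False (rejected) or None (unclear)."""
    # Assumption: if the model wraps its final answer in <answer>...</answer>, read only that part.
    tagged = re.search(r"<answer>(.*?)</answer>", answer, re.DOTALL | re.IGNORECASE)
    text = tagged.group(1) if tagged else answer
    # Look for a leading YES or NO, ignoring case and surrounding whitespace.
    verdict = re.match(r"\s*(yes|no)\b", text, re.IGNORECASE)
    if verdict is None:
        return None  # no clear YES/NO: leave the alert for a human operator
    return verdict.group(1).lower() == "yes"


def validate_alert(alert: Alert, ask_vlm: VlmQuery) -> Optional[bool]:
    """Ask the VLM whether an analytics alert shows a real event."""
    prompt = (
        f"A video analytics system raised this alert: '{alert.event_type}'. "
        "Watch the clip. Start your answer with YES if the event is really "
        "happening or NO if it is a false alarm, then explain briefly."
    )
    return parse_verdict(ask_vlm(alert.clip, prompt))
```

Passing `ask_vlm` in as a callable keeps the sketch independent of any particular HTTP client, so it can be tested with a stub before wiring in the real API. Returning `None` for an unclear answer lets an integration route ambiguous cases to an operator instead of silently dropping or confirming alerts.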

What to submit

Application

A working integration built using the VLM API, meeting the above requirements.

Text Description

Explanation of the solution’s features, functionality, and problem it addresses. Must specify how the VLM API was used and its role in the workflow.

Demonstration Video

- At least one video showing the integration in action is required.

- Should be less than three (3) minutes long.

- Must clearly demonstrate the application functioning as intended.

- Must be uploaded to YouTube or Vimeo with a public link provided.

Source Code (optional)

A link to a public GitHub repository including code, documentation, and setup instructions. Repositories should use an open-source license.

Live Demo Access (optional)

A link to a publicly accessible website, functioning demo, or deployed application. If access is restricted, provide login credentials in the submission instructions.

Documentation (optional)

Additional written documentation, README, or API usage notes that help judges understand and test the solution.

Judges

Magnus Blomkvist

DevRel Metropolis EMEA

Wilfried Rakow

Partner Developer Relations Manager

Sam Evans

AM Smart Spaces and Local Government EMEA

Edward Mauser

Director and Product - Lead Hafnia

Fulgencio Navarro

Head of Engineering and AI - Project Hafnia

Karolina Ziemenski

Senior AI Product Manager